Instagram is set to use blurring tools to fight “sextortion.”

The firm will deploy the software within weeks after a spate of blackmail cases involving intimate pictures sent online and “revenge porn.”

It includes “nudity protection,” which blurs naked images in direct messages and will be turned on by default for users under 18 years old.

Instagram has branded sextortion a “horrific” crime. The platform has also pledged to direct people who fall victim to it to support services.

Governments around the world have warned of the increasing threat to young people from sextortion, which typically involves targets being sent a nude picture before being invited to send their own in return.

Victims are then threatened that the image will be shared publicly unless they give in to the blackmailer’s demands, usually for a money transfer.

There are also cases of pedophiles using sextortion to coerce and abuse children.

But most sextortion cases are the work of organized criminal gangs intent on getting money from victims.

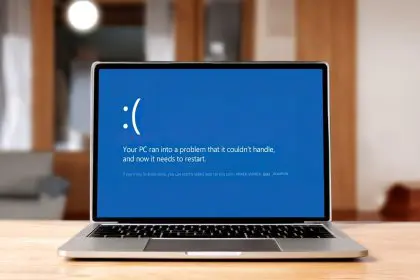

The new Instagram nudity protection system uses artificial intelligence running on users’ devices to detect nude images in direct messages and let recipients choose whether or not to view them.

The company says it is designed “not only to protect people from seeing unwanted nudity in their DMs but also to protect them from scammers who may send nude images to trick people into sending their own images in return.”

Instagram’s upcoming system does not automatically report nude images to the company, but users will be reminded they can block and report accounts if they wish.

When the system detects naked images being sent, users will be directed to safety tips, including a reminder that recipients can screenshot or forward images without the sender’s knowledge.

Instagram also says it will share more data to fight sextortion and child abuse with other technology companies via an initiative called Lantern.